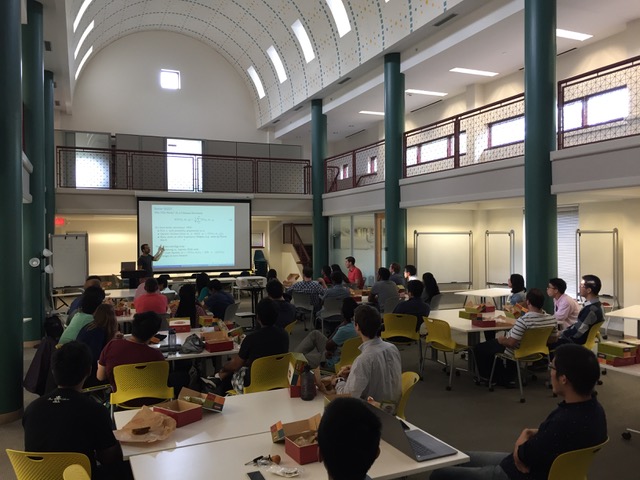

The Machine Learning Lunch Seminar is a weekly series, covering all areas of machine learning theory, methods, and applications. Each week, over 90 students and faculty from across Rice gather for a catered lunch, ML-related conversation, and a 45-minute research presentation. If you’re a member of the Rice community and interested in machine learning, please join us! Unless otherwise announced, the ML lunch occurs every Wednesday of the academic year at 12:00pm in Duncan Hall 3092 (the large room at the top of the stairs on the third floor).

The student coordinators are Michael Weylandt, Tianyi Yao, Andersen Chang, Lorenzo Luzi, and Cannon Lewis. The ML Lunch Seminar is sponsored by Marathon Oil.

Announcements about the ML Lunch Seminars and other ML events on campus are sent to the ml-l@rice.edu mailing list. Click here to join.

Machine Learning as Program Synthesis

November 20th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Swarat Chaudhuri

Please indicate interest, especially if you want lunch, here.

Abstract:

Program synthesis, the problem of automatically discovering programs that implement a given specification, is a long-standing goal in computer science. The last decade has seen a flurry of activity in this area, mostly in the Programming Languages community. However, this problem is also of interest to Machine Learning researchers. This is because machine learning models, especially for complex, procedural tasks, are naturally represented as programs that use symbolic primitives for higher-level reasoning and neural networks for lower-level pattern recognition. Such “neurosymbolic”, programmatic models have a number of advantages: they can be easier to interpret and more amenable to automatic formal certification than purely neural models, permit the encoding of strong inductive biases, and facilitate the transfer of knowledge across learning settings. The learning of such models is a form of program synthesis.

In this talk, I will summarize some of our recent work on such program synthesis problems. In particular, I will describe PROPEL, a reinforcement learning framework in which policies are represented as programs in a domain-specific language, and learning amounts to synthesizing these programs. I will also describe HOUDINI, a learning framework that uses the modularity of a functional programming language to reuse neural modules across learning tasks. Collectively, these results point to a new way in which ideas from Programming Languages and Machine Learning can come together and help realize the dream of high-performance, reliable, and trustworthy intelligent systems.

Integrative Network-Based Approaches to Understand Human Disease

November 13th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Vicky Yao (COMP)

Please indicate interest, especially if you want lunch, here.

Abstract:

The generation of diverse genome-scale data across organisms and experimental conditions is becoming increasingly commonplace, creating unprecedented opportunities for understanding the molecular underpinnings of human disease. However, realizing these opportunities relies on the development of novel computational approaches, as these large data are often noisy, highly heterogeneous, and lack the resolution required to study key aspects of metazoan complexity, such as tissue and cell-type specificity. Furthermore, targeted data collection and experimental verification are often infeasible in humans, underscoring the need for methods that can integrate -omics data, computational predictions, and biological knowledge across organisms. To address these challenges, I have developed diseaseQUEST, an integrative computational-experimental framework that combines human quantitative genetics with in silico functional network representations of model organism biology to systematically identify disease gene candidates. This framework leverages a novel semi-supervised Bayesian network integration approach to predict tissue- and cell-type specific functional relationships between genes in model organisms. Using C. elegans as a model system, we apply diseaseQUEST to construct 203 tissue- and cell-type specific functional networks and to predict candidate genes for 25 different human diseases and traits, focusing on Parkinson’s disease as a case study. I will also talk about a related project that models the role of cell-type specificity in human disease, in which I developed the first network models of Alzheimer’s-relevant neurons, in particular, the neuron type most vulnerable to the disease. We then use these models to predict and interpret processes that are critical to the pathological cascade of Alzheimer’s.

Understanding the Hardness of Samples in Neural Networks and Randomized Algorithms for Social Impact

November 6th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Beidi Chen (COMP)

Please indicate interest, especially if you want lunch, here.

Abstract:

This talk will be in two parts:

1) The mechanisms behind human visual systems and convolutional neural networks (CNNs) are vastly different. Hence, one would expect them to have different notions of ambiguity or hardness. In this work, we make a surprising discovery: there exists a (nearly) universal score function for CNNs whose correlation with human visual hardness is statistically significant. We term this function angular visual hardness (AVH); in a CNN, it is given by the normalized angular distance between a feature embedding and the classifier weights of the corresponding target category. We conduct an in-depth scientific study and observe that CNN models with the highest accuracy also have the best AVH scores. This agrees with an earlier finding that state-of-the-art models tend to improve on the classification of harder training examples. We find that AVH displays interesting dynamics during training: it quickly reaches a plateau even though the training loss keeps improving. This suggests the need for designing better loss functions that can target harder examples more effectively. Finally, we empirically show significant improvement in performance by using AVH as a measure of hardness in self-training tasks.

2) Entity resolution identifies and removes duplicate entities in large, noisy databases and has seen growing use and new methodological developments as a result of increased data availability. Nevertheless, entity resolution involves tradeoffs among assumptions about the data generation process, error rates, and computational scalability that make it a difficult task for real applications. In this work, we focus on a related problem, unique entity estimation: the task of estimating the number of unique entities, and the associated standard errors, in a data set with duplicate entities. Unique entity estimation shares many fundamental challenges with entity resolution, notably that the computational cost of all-to-all entity comparisons is intractable for large databases. To circumvent this computational barrier, we propose an efficient (near-linear time) estimation algorithm based on locality sensitive hashing. Under realistic assumptions, our estimator is unbiased and has provably low variance compared to existing approaches based on random sampling. In addition, we empirically show its superiority over state-of-the-art estimators on three real applications. The motivation for our work is to derive an accurate estimate of the documented, identifiable deaths in the ongoing Syrian conflict. Applied to the Syrian data set, our methodology provides an estimate of 191,874 ± 1,772 documented, identifiable deaths, which is very close to the Human Rights Data Analysis Group (HRDAG) estimate of 191,369. Our work provides an example of the challenges and efforts involved in solving a real, noisy, challenging problem where modeling assumptions may not hold.
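As a rough illustration of the AVH score from part 1, the Python sketch below computes, for one feature embedding, the angle to each class's weight vector and normalizes the target-class angle. The abstract only says "normalized angular distance"; dividing by the sum of the angles to all class weight vectors is an assumption here, and the function names `angle` and `avh` are hypothetical. Lower values mean the embedding sits closer, in angle, to its target class, so the sample is treated as easier.

```python
import math
from typing import Sequence


def angle(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle in radians between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos)


def avh(embedding: Sequence[float],
        class_weights: Sequence[Sequence[float]],
        target: int) -> float:
    """Hypothetical AVH sketch: angle to the target class weights divided by
    the sum of angles to all class weights (assumed normalization)."""
    angles = [angle(embedding, w) for w in class_weights]
    total = sum(angles)
    if total == 0.0:
        # Degenerate case: every weight vector points the same way as the embedding.
        raise ValueError("all class weight vectors are parallel to the embedding")
    return angles[target] / total
```

With embedding (1, 0) and class weights (1, 0), (0, 1), (-1, 0), the angles are 0, π/2 and π, so the AVH is 0 for class 0 and 2/3 for class 2.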

Can data analytics predict and prevent the onset of seizures in epileptic patients?

October 30th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Behnaam Aazhang (ELEC)

Please indicate interest, especially if you want lunch, here.

Abstract:

A fundamental research objective in neuro-engineering is to understand the ways in which functionalities of the brain emerge from the organization of neurons into highly connected circuits. This goal is one of the most critical scientific challenges in medicine for our generation. It is also critical to understanding how these functionalities are disrupted by trauma and by diseases such as depression, Alzheimer’s, or epilepsy. In this presentation, I will discuss how signal processing techniques and graph- and information-theoretic tools can unravel the brain circuit connections that underlie the onset of a seizure in epileptic patients. These tools can also provide features to predict the onset of seizures and possibly prevent them.

Optimizing Tensor Network Contraction

October 23rd, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Jeffrey Dudek (COMP)

Please indicate interest, especially if you want lunch, here.

Abstract:

Significant time, effort, and funding have been poured into algorithmic and hardware optimizations for machine learning, especially for neural network training and inference. Can we leverage this work to also improve the solving of other AI problems? In this talk, I will introduce tensor networks and show how they can be used to apply these hardware optimizations to a broad class of problems in AI, including model counting, network reliability, and probabilistic inference. The central algorithmic challenge for this approach is choosing the order in which to process tensors within the network; I will show how the order can be optimized using state-of-the-art tools for graph reasoning. Finally, I will show empirical evidence that this approach is competitive with existing tools in AI for model counting and probabilistic inference.
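Why contraction order matters can be seen on the simplest tensor network, a chain of matrices. The sketch below is a hypothetical illustration, not the speaker's tool: it finds the cheapest pairwise contraction order for a chain by dynamic programming, counting scalar multiplications as the cost. General tensor networks have richer graph structure than a chain, and the approach described in the talk relies on graph-reasoning tools rather than this interval-based search.

```python
from functools import lru_cache
from typing import List, Tuple


def chain_contraction_order(dims: List[int]) -> Tuple[int, str]:
    """Cheapest contraction order for a chain of matrices T0, T1, ...,
    where Ti has shape dims[i] x dims[i + 1]. Cost = scalar multiplications."""
    if len(dims) < 2:
        raise ValueError("need at least one matrix (two dimensions)")
    n = len(dims) - 1  # number of tensors in the chain

    @lru_cache(maxsize=None)
    def best(i: int, j: int) -> Tuple[int, str]:
        # Cheapest way to contract tensors i..j (inclusive) into one tensor.
        if i == j:
            return 0, f"T{i}"
        options = []
        for k in range(i, j):
            left_cost, left_expr = best(i, k)
            right_cost, right_expr = best(k + 1, j)
            # Final step contracts a (dims[i] x dims[k+1]) result
            # with a (dims[k+1] x dims[j+1]) result.
            step = dims[i] * dims[k + 1] * dims[j + 1]
            options.append((left_cost + right_cost + step,
                            f"({left_expr} {right_expr})"))
        return min(options)

    return best(0, n - 1)
```

For matrices of shapes 10×100, 100×5 and 5×50 (`dims = [10, 100, 5, 50]`), contracting left to right costs 7,500 multiplications, while contracting the last two first costs 75,000; the function returns `(7500, "((T0 T1) T2)")`.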

Phylogenetic Inference in the Post-genomic Era: Statistical Inference for Tree- and DAG-based Generative Models

October 16th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Luay Nakhleh (COMP)

Please indicate interest, especially if you want lunch, here.

Abstract:

Phylogenetic inference is mostly about estimating evolutionary histories from molecular sequence data. An evolutionary history is a generative model that consists of two components. The discrete component is a topology, often a rooted tree or a directed acyclic graph (DAG), whose leaves are labeled by a set of taxa (species, genes, cells, …). The continuous component is the set of evolutionary parameters (positive real numbers) that label the nodes and edges of the tree or DAG. The probability distribution that this model defines on sequence data is very complex and not amenable to the standard manipulations one encounters in machine learning textbooks. For example, neither the integral of the probability density nor the derivatives of the likelihood function can be computed analytically.

In this talk, I will describe two different areas of phylogenetics that my group is working on. The first concerns inferring DAGs from the genomes of different species. The second concerns inferring trees from single cancer cells in a patient. Both cases involve evolutionary mixture models and Markov models of sequence evolution. In the case of DAGs from species genomes, the probability distribution is given by a model adopted from population genetics. In the case of cancer cell trees, the model consists of clusters defined by a tree structure. Inference in both cases involves exploring a space of varying dimension; therefore, to conduct Bayesian inference, we employ reversible-jump Markov chain Monte Carlo samplers.

Machine Learning & Computational Vision Sensors

October 9th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Vivek Boominathan (ELEC)

Please indicate interest, especially if you want lunch, here.

Abstract:

Traditional imaging devices capture a photograph, which is then processed by machine learning algorithms to arrive at application-specific inferences such as object detection, tracking, and recognition. Thus, the optics in a traditional vision system are entirely unaware of the specifics of post-capture machine learning. In contrast, we suggest that the optical front-end in machine vision systems should be considered and exploited as an additional degree of computational freedom. Incorporating optical design has many benefits, such as improved performance, reduced physical form factor, low latency, and low power. In this talk, we will dive into optics designs that assist, and are tailored to, the machine learning task at hand. We will conclude by describing data-driven approaches to jointly design the optics along with the machine vision algorithms/CNNs.

Noise robustness in DNNs and leveraging inductive bias for learning without explicit human annotations

October 2nd, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Fatih Furkan Yilmaz (STAT)

Please indicate interest, especially if you want lunch, here.

Abstract:

Classification problems today are typically solved by first collecting examples along with candidate labels, second obtaining clean labels from workers, and third training a large, overparameterized deep neural network on the clean examples. The second, labeling step is often the most expensive one as it requires manually going through all examples. In this talk, we will discuss skipping the labeling step entirely and propose to directly train the deep neural network on the noisy candidate labels and early stop the training to avoid overfitting. With this procedure we’ll exploit an intriguing property of large overparameterized neural networks: While they are capable of perfectly fitting the noisy data, gradient descent fits clean labels much faster than the noisy ones, thus early stopping resembles training on the clean labels. We’ll first discuss some of the recent studies about the noise robustness of NNs that provide theoretical and practical motivation for this property and present experimental results of the state-of-the-art models for the widely considered CIFAR-10 classification problem. Our results show that early stopping the training of standard deep networks such as ResNet-18 on part of the Tiny Images dataset, which does not involve any human labeled data, and of which only about half of the labels are correct, gives a significantly higher test performance than when trained on the clean CIFAR-10 training dataset, which is a labeled version of the Tiny Images dataset, for the same classification problem. In addition, our results show that the noise generated through the label collection process is not nearly as adversarial for learning as the noise generated by randomly flipping labels, which is the noise most prevalent in works demonstrating noise robustness of NNs.

Prediction Models for Integer and Count Data

September 25th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Daniel Kowal (STAT)

Please indicate interest, especially if you want lunch, here.

Abstract:

A challenging scenario for prediction and inference occurs when the outcome variables are integer-valued, such as counts, (test) scores, or rounded data. Integer-valued data are discrete data and exhibit a variety of complex distributional features including zero-inflation, skewness, over- or under-dispersion, and in some cases may be bounded or censored. To meet these challenges, we propose a simple yet powerful framework for modeling integer-valued data. The data-generating process is defined by Simultaneously Transforming and Rounding (STAR) a continuous-valued process, which produces a flexible family of integer-valued distributions. The transformation is modeled as unknown for greater distributional flexibility, while the rounding operation ensures a coherent integer-valued data-generating process. Despite their simplicity, STAR processes possess key distributional properties and are capable of modeling the complex features inherent to integer-valued data. By design, STAR directly builds upon and incorporates the models and algorithms for continuous-valued data, such as Gaussian linear models, additive models, and Bayesian Additive Regression Trees. Estimation and inference are available for both Bayesian and frequentist models. Empirical comparisons are presented for several datasets, including a large healthcare utilization dataset, animal abundance data, and synthetic data. STAR demonstrates impressive predictive distribution accuracy with greater flexibility and scalability than existing integer-valued models.
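A STAR data-generating process can be simulated in a few lines. The Python sketch below assumes a log transformation, so the latent continuous value is exp(z) with z Gaussian, and a rounding operator that maps values below 1 to 0 and floors everything else. Both choices, and the helper names `star_round` and `sample_star`, are illustrative assumptions rather than the speaker's exact specification. Under these assumptions P(y = 0) = P(z < 0), so the probability mass at zero comes directly from the rounding step.

```python
import math
import random
from typing import List


def star_round(t: float) -> int:
    # Assumed rounding operator: values below 1 (including negatives) map to 0,
    # otherwise round down to the nearest integer.
    return 0 if t < 1 else math.floor(t)


def sample_star(mu: float, sigma: float, n: int, rng: random.Random) -> List[int]:
    """Draw n integer observations from a hypothetical STAR process with an
    assumed log transformation: z ~ N(mu, sigma^2), y* = exp(z), y = round(y*)."""
    draws = []
    for _ in range(n):
        z = rng.gauss(mu, sigma)   # latent continuous-valued process
        y_star = math.exp(z)       # inverse of the assumed log transformation
        draws.append(star_round(y_star))
    return draws
```

With `mu = 0`, roughly half of the draws are 0, since z < 0 with probability 1/2.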

Leveraging structure in cancer imaging data to predict clinical outcomes

September 18th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Souptik Barua (ELEC)

Please indicate interest, especially if you want lunch, here.

Abstract:

Immunotherapy and radiation therapy are two of the most prominent strategies used to treat cancer. While both treatments have succeeded in eliminating the disease in many patients and cancer types, they do not work well for all patients and sometimes even lead to adverse side effects. There is thus a critical need to predict how patients might respond to these therapies and to design optimal treatment plans accordingly. In this talk, I describe data-driven frameworks that leverage structure in two types of cancer imaging data (multiplexed immunofluorescence (mIF) images and CT scans) to predict clinical outcomes of interest. In the case of mIF images, I use ideas from spatial statistics and functional data analysis to design metrics that describe the spatial distributions of immune cells in a tumor. I then show that these metrics can predict outcomes such as survival and risk of progression in pancreatic cancer. In the case of CT scans, I use a functional data analysis technique to capture temporal changes in CT scans acquired at multiple time points, and use it to predict two clinical outcomes: (a) whether patients undergoing radiation treatment are likely to have a complete response, and (b) whether patients will develop long-term side effects of radiation treatment such as osteoradionecrosis.

Strong mixed-integer programming formulations for trained neural networks

September 11th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Joey Huchette (CAAM)

Please indicate interest, especially if you want lunch, here.

Abstract:

We present mixed-integer programming (MIP) formulations for high-dimensional piecewise linear functions that correspond to trained neural networks. These formulations can be used for a number of important tasks, such as: 1) verifying that an image classification network is robust to adversarial inputs, 2) designing DNA sequences that exhibit desirable therapeutic properties, 3) producing good candidate policies as a subroutine in deep reinforcement learning algorithms, and 4) solving decision problems with machine learning models embedded inside (i.e. the “predict, then optimize” paradigm). We provide formulations for networks with many of the most popular nonlinear operations (e.g. ReLU and max pooling) that are strictly stronger than other approaches from the literature. We corroborate this computationally on image classification verification tasks, where we show that our formulations are able to solve to optimality in orders of magnitude less time than existing methods.
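For context, the usual starting point for encoding a single ReLU neuron y = max(0, wᵀx + b) in a MIP is the big-M formulation below. It assumes known bounds L < 0 < U on wᵀx + b over the input domain and a binary indicator z. This is the standard baseline that stronger formulations are compared against, not the formulations presented in the talk.

```latex
\begin{aligned}
y &\ge w^\top x + b, \\
y &\ge 0, \\
y &\le w^\top x + b - L\,(1 - z), \\
y &\le U\, z, \\
z &\in \{0, 1\}.
\end{aligned}
```

Setting z = 1 forces y = wᵀx + b (and hence wᵀx + b ≥ 0); setting z = 0 forces y = 0 (and hence wᵀx + b ≤ 0).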

Data Integration: Data-Driven Discovery from Diverse Data Sources

September 4th, 12:00 pm - 1:00 pm in DCH 3092

Speaker: Genevera Allen (ECE)

Please indicate interest, especially if you want lunch, here.

Abstract:

Data integration, or the strategic analysis of multiple sources of data simultaneously, can often lead to discoveries that may be hidden in individual analyses of a single data source. In this talk, we present several new techniques for data integration of mixed, multi-view data, where multiple sets of features, possibly each of a different domain, are measured for the same set of samples. This type of data is common in healthcare, biomedicine, national security, multi-sensor recordings, multi-modal imaging, and online advertising, among others. Specifically, we highlight how mixed graphical models and new feature selection techniques for mixed, multi-view data allow us to explore relationships among features from different domains. Next, we present new frameworks for integrated principal components analysis and integrated generalized convex clustering that leverage diverse data sources to discover joint patterns among the samples. We apply these techniques to integrative genomic studies in cancer and neurodegenerative diseases to make scientific discoveries that would not be possible from analysis of a single data set.
